ML and AI Blogs | Issue# 7 [August 24, 2024]

There are multiple applications of Natural Language Processing (NLP) – the field that uses ML and AI to process and utilize language data and text. Applications include information retrieval, machine translation, language modeling, sentiment analysis, text summarization, chatbots, question-answering systems, and more. This blog will focus on solving a sentiment analysis problem (posed as a classification task) with Recurrent Neural Networks (RNNs) using TensorFlow. It is worth noting that this blog takes off from our earlier blog addressing the same problem using a simple neural network.

Introduction

We will use the Sentiment140 dataset (the same dataset was used in our earlier blog) to classify the sentiment of Twitter messages using RNNs. While Twitter has been rebranded to X, the messages in the dataset are from 2009, when Twitter was Twitter and tweets were limited to 140 characters. We will therefore refer to X as Twitter in this work. Sentiment140 allows us to discover the sentiment of a brand, product, or topic on Twitter.

Each of the training and test data comprise of a CSV file with emoticons removed. More here.

Note: The TensorFlow dataset link does state that the labels included in the dataset are 0 (negative), 2 (neutral), and 4 (positive). However, the training dataset currently contains the labels 0 (negative) and 4 (positive). The test dataset additionally contains the label 2 (neutral), apart from 0 (negative) and 4 (positive).

Data and Computing Resources

We will first go ahead and download the data and upload it in Google Drive. Of course, we could use the local Colab environment to store the data. However, the data is roughly of 240 MB in size (~ 1.6 million tweets) and uploading the same to Colab everytime the runtime restarts or is disconnected or is reassigned is not worth the wait.

Additionally, we will require a considerable amount of computing power or RAM for processing the data (text vectorization) and for fitting the RNN model (sentiment classifier), which is not available with the free tier of Colab. We will therefore need to upgrade to Colab Pro or go for Pay As you Go, as we will need to use a GPU or a TPU to process the data and to build the model.

We will be using a TPU in this work, as it is relatively cheaper than the GPUs available in the Colab environment. That can be achieved by navigating as follows.

- Go to Runtime –> Change runtime type –> Select TPU v2 under Hardware accelerator.

We can always monitor the usage of the resources we have selected as follows.

- Go to Runtime –> View resources.

With about ~1.6 million tweets to be used for training the RNN model, we are looking at huge costs in terms of computation and time. To optimize the use of computational resources and to minimize the time and cost required to train our model, we will use distributed computing or parallel processing on a TPU.

Getting Started

Let us load the relevant Python libaries.

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.callbacks import EarlyStopping, ReduceLROnPlateau

from google.colab import drive

import pandas as pds

import csv

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

from sklearn.metrics import confusion_matrix

import seaborn as snsFetch and Read the Data

Define the paths to import the training and test data from Google Drive. Additionally, we’ll also create cleaned-up versions of our training and test data and save those in the same folder on Google Drive as the datasets.

# Import the dataset from Google Drive.

drive.mount('/content/drive')

# Define the file paths for the training data and its cleaned-up version.

input_train_file_path = '/content/drive/MyDrive/Datasets/Sentiment140/trainingandtestdata/training.1600000.processed.noemoticon.csv'

output_train_file_path = '/content/drive/MyDrive/Datasets/Sentiment140/trainingandtestdata/cleaned.training.1600000.processed.noemoticon.csv'

# Define the file paths for the test data and its cleaned-up version.

input_test_file_path = '/content/drive/MyDrive/Datasets/Sentiment140/trainingandtestdata/testdata.manual.2009.06.14.csv'

output_test_file_path = '/content/drive/MyDrive/Datasets/Sentiment140/trainingandtestdata/cleaned.testdata.manual.2009.06.14.csv'

Clean the Data

To avoid running into potential issues with the original data, we will go ahead and read the training and test data manually, line-by-line, to identify and filter out the problemactic rows and store the cleaned-up data in a new CSV file.

# Read the training and test datasets line-by-line to identify problematic rows.

# Manually filter out problematic rows and create a clean version of the CSV data file.

def clean_data(input_file_path, output_file_path):

with open(input_file_path, 'r', encoding='ISO-8859-1') as infile, open(output_file_path, 'w', encoding='ISO-8859-1', newline='') as outfile:

reader = csv.reader(infile)

writer = csv.writer(outfile)

for row in reader:

try:

writer.writerow(row)

except csv.Error as e:

print(f'Error processing row: {row}')

continueLet us go ahead and clean the training and test data.

# Clean the training data.

clean_data(input_train_file_path, output_train_file_path)

# Clean the test data.

clean_data(input_test_file_path, output_test_file_path)We will now go ahead and read the new, cleaned-up training and test data.

# Read the training data into a pandas DataFrame.

raw_train_dataset = pds.read_csv(output_train_file_path, na_values='?', sep=',',

skipinitialspace=True, encoding='ISO-8859-1')

# Read the test data into a pandas DataFrame.

raw_test_dataset = pds.read_csv(output_test_file_path, na_values='?', sep=',',

skipinitialspace=True, encoding='ISO-8859-1')Inspect the Data

Let us first confirm the size of the training and test datasets in terms of the number of examples / rows in them.

# Confirm the number of rows / examples in the training dataset.

num_examples_train = raw_train_dataset.shape[0]

print("Number of training examples:", num_examples_train)

# Confirm the number of rows / examples in the test dataset.

num_examples_test = raw_test_dataset.shape[0]

print("Number of test examples:", num_examples_test)

The next step is to investigate the cleaned-up training and test data for further analysis.

# Display the first few rows of the training dataset to verify.

raw_train_dataset.head()

# Display the first few rows of the test dataset to verify.

raw_test_dataset.head()

It can be observed by inspecting the training and test datasets that the first column contains the labels, whereas the last column houses the text (tweets) we are interested in. Let us confirm the unique values in the labels column.

# Fetch and print the unique labels in the training data.

unique_values_train = raw_train_dataset.iloc[:, 0].unique()

print(unique_values_train)

# Fetch and print the unique labels in the test data.

unique_values_test = raw_test_dataset.iloc[:, 0].unique()

print(unique_values_test)

Normalize the Data

We can observe that the training labels comprise of two values, 0 (negative) and 4 (positive). Therefore, we will now go ahead and normalize the training labels so that the 4’s are converted to 1’s (we will use 1 to denote the positive labels). This will help us when we build our model / sentiment classifier to perform binary classification.

# Normalize the training labels.

raw_train_dataset.iloc[:, 0] = raw_train_dataset.iloc[:, 0].apply(lambda x: 0 if x == 0 else 1)

# Normalize the test labels.

raw_test_dataset.iloc[:, 0] = raw_test_dataset.iloc[:, 0].apply(lambda x: 0 if x == 0 else 1)Let us print and confirm the unique labels in the training dataset after performing normalization.

# Fetch and print the unique labels in the training data after performing normalization.

unique_values_train_normalized = raw_train_dataset.iloc[:, 0].unique()

print(unique_values_train_normalized)

It can also be observed that the test dataset is comprised of the labels 0 (negative), 2 (neutral), and 4 (positive). However, we need to ensure that the training and test data are consistent so that we can evaluate our model (a binary classifier) on the classes it was trained on. We will therefore go ahead and filter out the rows from the test dataset with the label 2 (neutral).

# Filter out the rows from the test data with the label 2 (neutral).

filtered_test_dataset = raw_test_dataset[raw_test_dataset.iloc[:, 0] != 2]We will go ahead and take a look at the number of elements in the filtered test dataset.

# Confirm the number of rows / examples in the test dataset after filtering.

num_examples_test_filtered = filtered_test_dataset.shape[0]

print("Number of test examples after filtering:", num_examples_test_filtered)

Let us confirm the unique values in the labels column of the test data once again.

# Fetch and print the unique labels in the filtered test data.

unique_filtered_values_test = filtered_test_dataset.iloc[:, 0].unique()

print(unique_filtered_values_test)

Now that we are sure that the test data comprises of just the labels 0 (negative) and 4 (positive), we will go ahead and normalize the test labels so that the 4’s are converted to 1’s (positive labels).

# Normalize the test labels.

filtered_test_dataset.iloc[:, 0] = filtered_test_dataset.iloc[:, 0].apply(lambda x: 0 if x == 0 else 1)Let us print and confirm the unique labels in the test dataset after performing normalization.

# Fetch and print the unique labels in the test data after performing normalization.

unique_filtered_values_test_normalized = filtered_test_dataset.iloc[:, 0].unique()

print(unique_filtered_values_test_normalized)

Separate out the Labels from the Features

Let us go ahead and extract the labels and the text data from the training and test datasets for further processing and for feeding into our model later.

# Extract the labels (first column) from the training dataset.

train_labels = raw_train_dataset.iloc[:, 0].values

# Extract the text data (last column) from the training dataset.

train_text_data = raw_train_dataset.iloc[:, -1].values

# Display the first few labels and text entries from the training dataset to verify.

print(train_labels[:5])

print(train_text_data[:5])

# Extract the labels (first column) from the test dataset.

test_labels = raw_test_dataset.iloc[:, 0].values

# Extract the text data (last column) from the test dataset.

test_text_data = raw_test_dataset.iloc[:, -1].values

# Display the first few labels and text entries from the test dataset to verify.

print(test_labels[:5])

print(test_text_data[:5])

Distributed Training and Parallelization

As indicated earlier, we would leverage TensorFlow’s distributed computing framework to optimize the use of computational resources and to minimize the time and cost required to train our RNN model. Let us set up TensorFlow to leverage TPU resources for distributed training.

The TPUClusterResolver connects to the TPU system, initializes it, and verifies that the TPU resources are ready and properly configured before starting the training process.

# Initialize the TPU cluster resolver to connect to the TPU system.

resolver = tf.distribute.cluster_resolver.TPUClusterResolver()

# Connect to the TPU cluster using the resolver.

tf.config.experimental_connect_to_cluster(resolver)

# Initialize the TPU system to prepare it for training.

tf.tpu.experimental.initialize_tpu_system(resolver)

We will now define a strategy to distribute the training across TPU cores, aiming to accelerate and optimize the training process. It is worth noting that in this particular case, distributed computing using the TPUStrategy reduces the training time by a factor of hundreds, as compared to the time required to train the model without implementing parallelization.

# Step 2: Define the distribution strategy to distribute the training across the TPU cores.

strategy = tf.distribute.TPUStrategy(resolver)Prepare the Data

We will now work on preprocessing the text data to be fed into our RNN model / sentiment classifier.

Apply Text Vectorization

Let us first understand more a few important terms.

Standardization refers to preprocessing the text, typically to remove punctuation or HTML elements to simplify the dataset. Tokenization is the process of splitting strings into tokens (e.g., splitting a sentence into individual words by splitting on whitespace) and each token is a feature of the text data. Vectorization converts tokens into numbers so they can be fed into a neural network. All of the aforementioned tasks can be accomplished with the tf.keras.layers.TextVectorization layer that we would be using to preprocess the text data.

Before applying the TextVectorization layer and proceeding with tokenization of the training and test text data, we will convert the data into strings, as the TextVectorization layer in TensorFlow / Keras expects input data to be in the string format.

# Convert all text data to strings.

train_text_data = train_text_data.astype(str)

test_text_data = test_text_data.astype(str)Now, we are finally ready to standardize, tokenize, and vectorize the text data using the TextVectorization preprocessing layer. To facilitate the same, we will adapt the TextVectorization layer to the training data (fit on the training data). This will cause the model to build a vocabulary – an index of strings to integers.

# Maximum number of unique tokens or features to retain in the vocabulary.

max_features = 20000

# Maximum number of tokens of each input sequence / example to be processed.

sequence_length = 64

# Define the TextVectorization layer.

vectorize_layer = layers.TextVectorization(

standardize='lower_and_strip_punctuation',

max_tokens=max_features,

output_mode='int',

output_sequence_length=sequence_length)

vectorize_layer.adapt(train_text_data)We will now go ahead and split the training data into training and validation sets. We will use 20% of the training data for validation.

# Split the training data into training and validation data.

text_train, text_val, labels_train, labels_val = train_test_split(train_text_data, train_labels, test_size=0.2, random_state=42)As the final preprocessing step, let us go ahead and apply the TextVectorization layer to the training, validation, and test data, and take a look at the vectorized data.

# Apply TextVectorization to standardize, tokenize, and vectorize the training data.

text_train_vectorized = vectorize_layer(text_train)

# Visualize the training data.

print("Vectorized Training Data:\n", text_train_vectorized.numpy())

# Apply TextVectorization to standardize, tokenize, and vectorize the validation data.

text_val_vectorized = vectorize_layer(text_val)

# Visualize the validation data.

print("Vectorized Validation Data:\n", text_val_vectorized.numpy())

# Apply TextVectorization to standardize, tokenize, and vectorize the test data.

text_test_vectorized = vectorize_layer(test_text_data)

# Visualize the test data.

print("Vectorized Test Data:\n", text_test_vectorized.numpy())

As mentioned earlier, vectorization replaces each token by an integer. We can lookup the token (string) that each integer corresponds to by calling .get_vocabulary() on the layer. Let us check a couple of random entries from the vocabulary and also get an idea about the size of the vocabulary.

# Check a few vocabulary entries.

print("872 ---> ",vectorize_layer.get_vocabulary()[872])

print("1729 ---> ",vectorize_layer.get_vocabulary()[1729])

print("3391 ---> ",vectorize_layer.get_vocabulary()[3391])

print('Vocabulary size: {}'.format(len(vectorize_layer.get_vocabulary())))

Configure the Data for Performance

Firstly, we will have to convert our data into a TensorFlow dataset. This step ensures efficient data handling, allows for batching, and integrates seamlessly with TensorFlow / Keras models, resulting in faster and more effective training.

There are two important methods that should be used when loading data to make sure that I/O does not become blocking.

cache()keeps data in memory after it’s loaded off disk. This will ensure the dataset does not become a bottleneck while training the model.prefetch()overlaps data preprocessing and model execution while training.

More on both of the aforementioned methods, as well as how to cache data to disk in the data performance guide here.

# Batch size to be used for training.

batch_size = 1024

# Define the buffer size for shuffling the dataset.

buffer_size = 10000

# Automatically tune the buffer size for optimal data loading performance.

AUTOTUNE = tf.data.AUTOTUNE# Create TensorFlow datasets.

train_ds = tf.data.Dataset.from_tensor_slices((text_train_vectorized, labels_train))

val_ds = tf.data.Dataset.from_tensor_slices((text_val_vectorized, labels_val))

test_ds = tf.data.Dataset.from_tensor_slices((text_test_vectorized, test_labels))

# Batch the datasets.

train_ds = train_ds.batch(batch_size)

val_ds = val_ds.batch(batch_size)

test_ds = test_ds.batch(batch_size)

# Cache, shuffle, and prefetch the datasets to improve performance.

train_ds = train_ds.cache().shuffle(buffer_size).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

test_ds = test_ds.cache().prefetch(buffer_size=AUTOTUNE)# Automatically tune the buffer size for optimal data loading performance.

AUTOTUNE = tf.data.AUTOTUNE

# Cache and prefetch the datasets to improve performance.

train_ds = train_dataset.cache().prefetch(buffer_size=AUTOTUNE)

val_ds = val_dataset.cache().prefetch(buffer_size=AUTOTUNE)

test_ds = test_dataset.cache().prefetch(buffer_size=AUTOTUNE)The shuffle() function in the data pipeline randomizes the order of the elements in the dataset. This helps prevent overfitting of the data and improves the generalization capabilities of the model.

Build and Train the Model

We will now go ahead and define our binary classifier – a RNN model, and optimize the training process. Before that, let us take a quick look at what a RNN is and also define Long Short-Term Memory (LSTM) – a type of RNN that we would be using to create our model.

RNN and LSTM

- RNN: RNNs are a class of neural networks designed for processing sequential data, such as time series, natural language, or any data where the order of the input matters. RNNs have loops that allow information to persist in the network, enabling them to handle sequences of varying lengths.

- LSTM: LSTM is a specific architecture within the RNN family that was developed to address the limitations of traditional RNNs, particularly the issue of long-term dependencies. Traditional RNNs struggle to maintain relevant information over long sequences due to problems, such as vanishing or exploding gradients. LSTMs introduce a more sophisticated memory cell and gating mechanism (input gate, forget gate, and output gate) that help the network decide which information to retain, update, or discard. We would use LSTM in this work to create the RNN model.

Define the Model

Let us define the embedding dimension for our neural network model, i.e., the length of the vector representing each word or token in the vocabulary. We will also use L2 regularization to prevent overfitting of the data.

# Embedding dimension.

embedding_dim = 16

# Define a kernel regularizer to prevent overfitting the data.

regularizer = tf.keras.regularizers.L2(0.01)We will build and compile the RNN model with our distributed TPU training strategy by using the function strategy.scope(). The vectorized data will be passed into the RNN model. The model has the following layers.

- The

Embeddinglayer is used to transform the input tokens into dense vectors, learn the word or token representations from the training data, and to map the high-dimensional sparse input space into a lower-dimensional dense space. These vectors are trainable. After training (on enough data), words with similar meanings often have similar vectors. Settingmask_zero=Truespecifies that the embedding layer should ignore padding tokens (which are typically represented by zeros) in the input sequences. - A

LSTMlayer processes sequence input by iterating through the elements. RNNs pass the outputs from one timestep to their input on the next timestep. Settingreturn_sequences=Trueensures that the output is a sequence of vectors, one for each successive time step in the input sequence. The output will have the same sequence length as that of the input, so it can be passed to another RNN layer, which is exactly what we are doing.TheBidirectionalwrapper is being used with the RNN layer. This propagates the input forward and backwards through the RNN layer and then concatenates the final output.- Advantage of a Bidirectional RNN: The signal from the beginning of the input doesn’t need to be processed all the way through every timestep to affect the output.

- Disdvantage of a Bidirectional RNN: The main disadvantage of a bidirectional RNN is that we cannot efficiently stream predictions as words are being added to the end.

Dropoutis used to regularize the model by randomly setting a fraction (50% here) of its input units to 0 during training to prevent overfitting.- After the RNN has converted the sequence to a single vector, the

Denselayer with ReLU activation reduces the dimensionality of theLSTMoutput, condensing the high-dimensional data into a more compact, low-dimensional representation. It also extracts and emphasizes key, relevant features, providing a refined, intermediate representation of the data. - The final layer is a single-unit

Denselayer with a sigmoid activation function for binary classification (outputs a probability of a class or label, i.e., positive or negative sentiment).

L2 regularization has been applied to the LSTM and Dense layers. L2 regularization is a type of kernel regularizer that is applied to the kernel (weights) to prevent the model from overfitting the data by penalizing large weights of the model.

Let us go ahead and train our model and fit it to the training data and validate it on the validation data.

# Define the scope for distributed training using the TPUStrategy.

with strategy.scope():

model = tf.keras.Sequential([

# Embedding layer: Converts word indices into dense vectors.

layers.Embedding(max_features, embedding_dim, mask_zero=True),

# First Bidirectional LSTM layer: Processes the sequence in both directions.

tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(64, return_sequences=True, kernel_regularizer=regularizer)),

# Dropout layer: Randomly sets the outputs of 50% of the input units / neurons to 0 to prevent overfitting.

tf.keras.layers.Dropout(0.5),

# Second Bidirectional LSTM layer: Processes the sequence and returns the final state.

tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32, kernel_regularizer=regularizer)),

# Dense layer: Fully connected layer with 64 units to further refine the features.

tf.keras.layers.Dense(64, activation='relu', kernel_regularizer=regularizer),

# Dropout layer: Further prevents overfitting by randomly dropping 50% of the units.

tf.keras.layers.Dropout(0.5),

# Output layer: A single neuron for binary classification.

tf.keras.layers.Dense(1)

])# Investigate the model.

model.summary()

Select the Loss Function, Optimizer, and Evaluation Metric

The model needs a loss function and an optimizer for training. Since this is a binary classification problem and the model outputs a probability, we will use the BinaryCrossentropy loss function.

We will go ahead and configure the RNN model to use an optimizer (Adam) and the BinaryCrossentropy loss function. Also, we will start off with a learning rate of 0.0005. Implementing gradient clipping by using clipnorm=1 limits the magnitude of the gradients during backpropagation to prevent large updates that can destabilize training. This is particularly useful in RNNs / LSTMs, where exploding gradients can be an issue. Setting from_logits=True helps extract the logits (raw, unnormalized prediction scores) from the RNN model’s outputs and uses the sigmoid function to convert the logits into normalized probabilities.

Additionally, we will use BinaryAccuracy for the metric of our binary classifier. Given the RNN model will output a probability, we will define a threshold of 0.5. This implies that if the probability output by the model is greater than or equal to 0.5, we will label it as 1 (positive sentiment), while if the probability is less than 0.5, we will label it as 0 (negative sentiment).

Finally, we will compile the RNN model.

# Define the scope for distributed training using the TPUStrategy.

with strategy.scope():

# Compile the model.

model.compile(

optimizer=tf.keras.optimizers.Adam(learning_rate=0.0005, clipnorm=1.0), # Pick the optimizer, learning rate, and implement gradient clipping.

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True), # Select the loss function for the logits (raw prediction scores).

metrics=[tf.metrics.BinaryAccuracy(threshold=0.5)]) # Choose an evaluation metric and a threshold.Define Callbacks

We will set up an EarlyStopping callback to save resources and stop training when there is no improvement in terms of minimizing the loss and improving the accuracy of the RNN model.

# Define an early stopping callback.

early_stopping = EarlyStopping(

monitor='val_loss', # Monitor the validation loss.

patience=3, # The number of epochs with no improvement after which training will be stopped.

restore_best_weights=True # Restore model weights from the epoch with the best value of the validation loss.

)We will also use the ReduceLROnPlateau callback to monitor the validation loss and reduce the learning rate if the validation loss does not improve for a predefined number of epochs.

# Define a callback for reducing the learning rate as required.

reduce_lr = ReduceLROnPlateau(

monitor='val_loss', # Monitor the validation loss.

factor=0.2, # Reduce the learning rate by a factor of 0.2 if the validation loss does not improve for 'patience' epochs.

patience=2, # The number of epochs with no improvement in the validation loss, after which the learning rate will be reduced.

min_lr=0.0001) # Minimum learning rate.Train the Model

Let us go ahead and train our RNN model and fit it to the training data and validate it on the validation data.

# Number of epochs to train the data.

epochs = 50

# Train and fit the model.

history = model.fit(

train_ds,

validation_data=val_ds,

epochs=epochs,

callbacks=[early_stopping, reduce_lr],

verbose=1)

Evaluate the Model

In this section, we will perform the following tasks.

- Evaluate how the RNN model performs with the test data.

- Plot the loss and accuracy of the RNN model with the training and validation data.

- Have the RNN model classify sentiments across some unseen data that we will feed it with.

- Plot the confusion matrix to investigate the RNN model in terms of the false positives and false negatives.

Performance on Test Data

Let us evaluate the RNN model and see how it performs on the test data in terms of the loss and accuracy.

# Evaluate the model in terms of the loss and accuracy on the test data.

loss, accuracy = model.evaluate(test_ds)

print("Loss: ", loss)

print("Accuracy: ", accuracy)

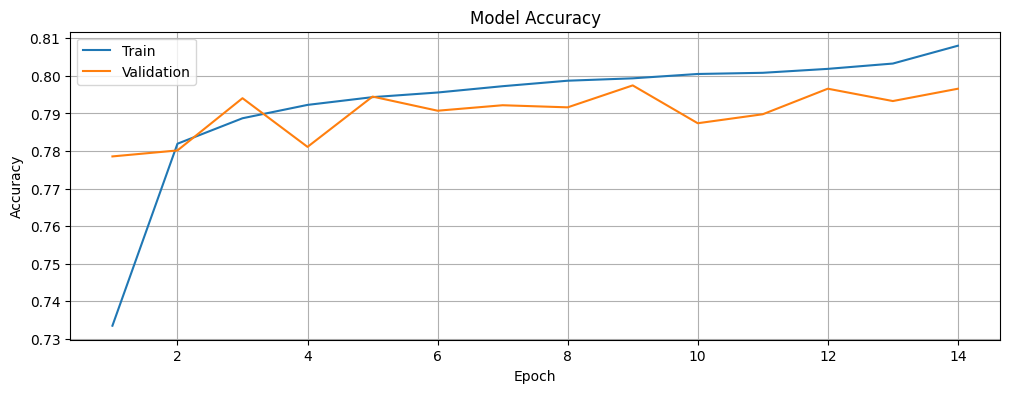

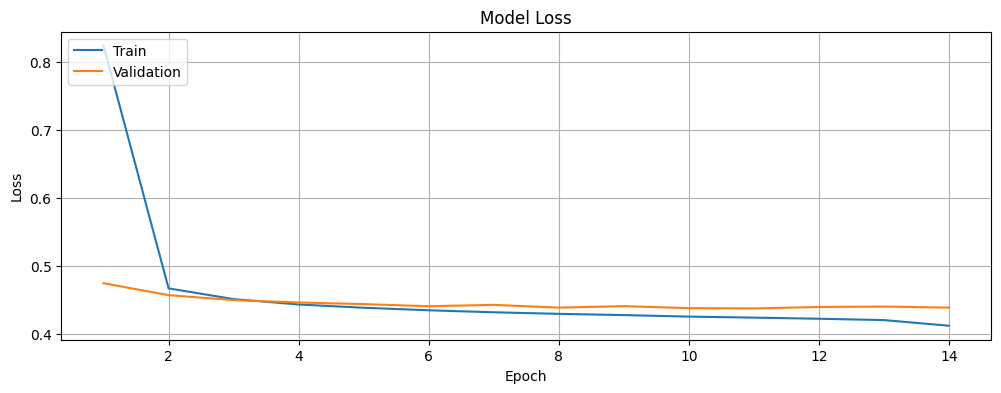

Plot the Training and Validation Loss and Accuracy

Let us plot the accuracy and loss of the RNN model for the training and validation data and investigate if we are overfitting or underfitting the data. The history object contains information about the training process that we will leverage.

history_dict = history.history

# Explore the information about the training process available in the 'history' object.

history_dict.keys()

# Training accuracy.

acc = history_dict['binary_accuracy']

# Validation accuracy.

val_acc = history_dict['val_binary_accuracy']

# Training loss.

loss = history_dict['loss']

# Validation loss.

val_loss = history_dict['val_loss']

# Number of epochs used for training.

epochs = range(1, len(acc) + 1)We will now plot the training and validation accuracy of the RNN model.

# Function to plot the training and validation accuracy.

def plot_accuracy(history):

# Plot the training & validation accuracy values.

plt.figure(figsize=(12, 4))

plt.plot(epochs, acc, label='accuracy')

plt.plot(epochs, val_acc, label='val_accuracy')

plt.title('Model Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend(['Train', 'Validation'], loc='upper left')

plt.grid(True)

plt.show()plot_accuracy(history)Let us plot the training and validation loss of the RNN model.

# Function to plot the training and validation loss.

def plot_loss(history):

# Plot the training & validation loss values.

plt.figure(figsize=(12, 4))

plt.plot(epochs, loss, label='loss')

plt.plot(epochs, val_loss, label='val_loss')

plt.title('Model Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend(['Train', 'Validation'], loc='upper left')

plt.grid(True)

plt.show()plot_loss(history)

Test the Model with Unseen Data

We will feed the RNN model with some random text examples and see how it performs.

sample_examples = tf.constant([

"I am doing well",

"The movie was okay.",

"I have not been keeping well.",

"It's a bright sunny day, let's go fishing!",

"Great!",

"Disaster!",

"I am feeling confident",

"I am not feeling confident",

])

vectorize_layer.adapt(sample_examples)Let us go ahead and define a function that confirms whether the sentiment is positive or negative based on a threshold of 0.5, as mentioned earlier.

# Output the sentiment of the given example based on a threshold.

def interpret_predictions(predictions):

results = []

for pred in predictions:

if pred >= 0.5:

results.append("Positive Sentiment")

else:

results.append("Negative Sentiment")

return results# Vectorize the sample text examples.

vectorized_examples = vectorize_layer(sample_examples)

# Predict the sentiment of the sample text examples.

sample_predictions = model.predict(vectorized_examples)

# Interpret the predicted sentiments of the sample data.

sentiment_results = interpret_predictions(sample_predictions)

for result in sentiment_results:

print(result)

Investigate the probability values of the output predictions of the sample text examples to understand how close or off the predictions of the sentiments are.

print(sample_predictions)

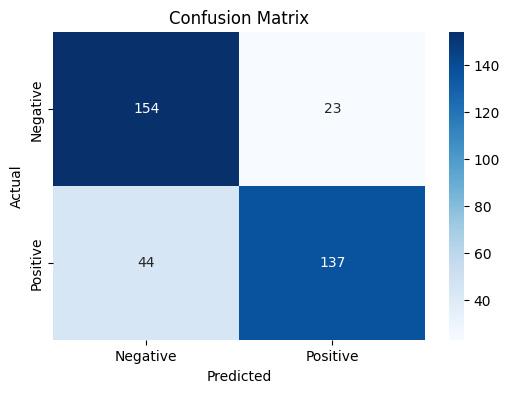

Confusion Matrix

We will also plot the confusion matrix to investigate how the RNN model performs in terms of the false positives and false negatives. Let us first get the test predictions, which are probabilities, and convert them to our labels 0 and 1 based on our threshold of 0.5.

# Predict with the test data.

test_predictions = model.predict(test_ds)

predicted_test_labels = (test_predictions > 0.5).astype("int32")

Next, we will define and plot the confusion matrix based on the labels in the test data and the labels predicted by the RNN model.

# Confusion matrix.

cm = confusion_matrix(test_labels, predicted_test_labels)# Confusion matrix.

cm = confusion_matrix(test_labels, predicted_test_labels)# Plot the confusion matrix.

plt.figure(figsize=(6, 4))

sns.heatmap(cm, annot=True, fmt='d', cmap='Blues', xticklabels=['Negative', 'Positive'], yticklabels=['Negative', 'Positive'])

plt.xlabel('Predicted')

plt.ylabel('Actual')

plt.title('Confusion Matrix')

plt.show()

Thoughts

The RNN model has an accuracy of about 81% and has about 23 false positives and 44 false negatives out of 358 tweets. The numbers may slightly vary across separate occasions of training. However, it is important to note that this is a quick and dirty implementation of a very simple RNN model, and the results are not too bad based on the same.

It is also worth noting that while the training and validation loss decreases with time, the accuracy increases, before almost stabilizing and reaching a stage where there is no further noticeable improvement. Therefore, it is unlikely that we are overfitting or underfitting the data. Although the training accuracy may seem to increase and the training loss may seem to decrease towards the end of the training indicating that the model may be prone to overfitting the training data, they would have eventually stabilized, had we allowed the training to continue by not using EarlyStopping. Additionally, the validation accuracy may seem to be unstable, but would have eventually stabilized had we not been using EarlyStopping. This can be confirmed by looking at the validation loss, that does seem to stabilize even with EarlyStopping.

Next Steps

Of course, there is a definite scope for improvement in this work. There are multiple ways to further develop this dirty implementation and improve the RNN model’s performance / accuracy for the sentiment analysis of the tweets. Some of those are listed below.

- Modifying the Architecture: Adding more fully connected / dense layers or increasing the number of units in each layer can help the model learn more complex patterns. However, to prevent further overfitting of the training data, simplifying the architecture of the model can help instead of adding more layers.

- Hyperparameter Tuning: Experimenting with different learning rates, batch sizes, epochs, etc. can help.

- Regularization: Adjusting dropout rates and the strength of L2 regularization can be used to control overfitting. Other techniques that can be used to prevent overfitting include learning rate warmup, or an advanced technique, such as label smoothing.

- Data Augmentation: Using techniques to augment the dataset can help, examples below.

- Synonym Replacement: Replace words with their synonyms.

- Random Insertion: Insert random words.

- Random Swap: Swap words in a sentence.

- Random Deletion: Delete random words.

- Pre-trained Embeddings: Using pre-trained word embeddings such as GloVe or FastText can improve the performance of the model.

- Ensemble Methods: Using ensemble methods, such as combining predictions from multiple models can improve performance.

- Fine-tuning Pre-trained Large Language Models (LLMs): Using pre-trained LLMs, such as BERT, GPT-4, or similar models from the Hugging Face Transformers library can significantly uplift the performance of word-based models.

- Hyperparameter Optimization: Using libraries, such as Keras Tuner or Optuna to find the best hyperparameters for the RNN model.

Here is the link to the GitHub repo for this work.

Thank you for reading through! I genuinely hope you found the content useful. Feel free to reach out to us at ankanatwork@gmail.com and share your feedback and thoughts to help us make it better for you next time.

Acronyms used in the blog that have not been defined earlier: (a) Machine Learning (ML), (b) Artificial Intelligence (AI), (c) Comma Separated Values (CSV), (d) Megabyte (MB), (e) Random-Access Memory (RAM), (f) Graphics Processing Unit (GPU), (g) Tensor Processing Unit (TPU), (h) Hypertext Markup Language (HTML), (i) Input/Output (I/O), and (j) Rectified Linear Unit (ReLU).